MIT researchers invent simulation system to train driverless cars to navigate worst-case scenarios

March 25, 2020 | AUVSI News

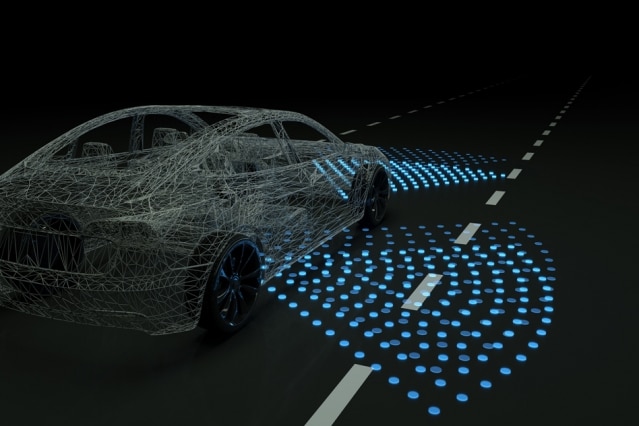

To help driverless cars learn to navigate a variety of worst-case scenarios before they begin operating on real roads, MIT researchers have invented a simulation system that trains driverless cars in a photorealistic world with “infinite” steering possibilities.

According to the researchers, control systems for autonomous vehicles, also known as “controllers,” largely rely on real-world datasets of driving trajectories collected from human drivers. The vehicles use this data to learn to emulate safe steering in different situations. The researchers note, though, that real-world data from hazardous “edge cases,” such as nearly crashing or being forced off the road or into other lanes, are rare.

Some computer programs, called “simulation engines,” seek to imitate these situations by rendering detailed virtual roads on which controllers can be trained to recover. However, the researchers explain, control learned in simulation has never been shown to transfer to reality on a full-scale vehicle.

To address this issue, the MIT researchers use their photorealistic simulator, called Virtual Image Synthesis and Transformation for Autonomy (VISTA). It uses only a small dataset, captured by humans driving on a road, to synthesize a practically infinite number of new viewpoints from trajectories that the vehicle could take in the real world. Because the controller is rewarded for the distance it travels without crashing, it must learn by itself how to reach a destination safely. In doing so, it learns to safely navigate any situation it encounters, including regaining control after swerving between lanes or recovering from near-crashes.

During tests, a controller trained within the VISTA simulator was safely deployed onto a full-scale driverless car and navigated through previously unseen streets. When the car was positioned at off-road orientations that mimicked various near-crash situations, the controller also successfully recovered it to a safe driving trajectory within a few seconds.

“It’s tough to collect data in these edge cases that humans don’t experience on the road,” says first author Alexander Amini, a PhD student in the Computer Science and Artificial Intelligence Laboratory (CSAIL).

“In our simulation, however, control systems can experience those situations, learn for themselves to recover from them, and remain robust when deployed onto vehicles in the real world.”

Historically, the researchers say, building simulation engines for training and testing autonomous vehicles has been a largely manual task: companies and universities often employ teams of artists and engineers to sketch virtual environments, with accurate road markings, lanes, and even detailed leaves on trees. Some engines also incorporate the physics of a car’s interaction with its environment, based on complex mathematical models.

Since there is no shortage of things to consider in complex real-world environments, researchers say that “it’s practically impossible to incorporate everything into the simulator.” As a result, there’s usually a mismatch between what controllers learn in simulation and how they operate in the real world.

With this in mind, the MIT researchers created what they call a “data-driven” simulation engine that uses real data to synthesize new trajectories consistent with road appearance, as well as the distance and motion of all objects in the scene.

First, they collect video data from a human driving down a few roads and feed it into the engine. For each frame, the engine projects every pixel into a type of 3D point cloud, and a virtual vehicle is then placed inside that world. When the vehicle makes a steering command, the engine synthesizes a new trajectory through the point cloud based on the steering curve and the vehicle’s orientation and velocity.
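The trajectory step can be illustrated with a simple geometric model. The sketch below assumes the steering command is expressed as a path curvature (1/m, positive meaning a left turn) and that the car follows a circular arc at constant speed over each time step. The article does not describe VISTA's vehicle model, so `advance_pose` and its parameters are hypothetical.

```python
import math

def advance_pose(x, y, heading, speed, curvature, dt):
    """Advance a 2D vehicle pose along a circular arc (hypothetical model).

    x, y: position in meters; heading: radians (0 = along +x);
    speed: m/s; curvature: 1/turning-radius in 1/m (0 = straight); dt: seconds.
    """
    arc = speed * dt  # distance covered along the path during this step
    if abs(curvature) < 1e-9:
        # Zero curvature: straight-line motion along the current heading.
        return x + arc * math.cos(heading), y + arc * math.sin(heading), heading
    new_heading = heading + curvature * arc
    # Closed-form integration of position along a circle of radius 1/curvature.
    new_x = x + (math.sin(new_heading) - math.sin(heading)) / curvature
    new_y = y - (math.cos(new_heading) - math.cos(heading)) / curvature
    return new_x, new_y, new_heading

# Example: a quarter turn to the left on a 1 m radius circle.
pose = (0.0, 0.0, 0.0)
pose = advance_pose(*pose, speed=math.pi / 2, curvature=1.0, dt=1.0)
# pose is now approximately (1.0, 1.0, pi / 2)
```

Repeating this step for each steering command yields a synthesized trajectory. In a data-driven simulator of this kind, the difference between the synthesized pose and the recorded human pose at each frame is what a renderer would need in order to produce the new view.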

The engine then uses that new trajectory to render a photorealistic scene. To do so, it uses a convolutional neural network, a type of network commonly used for image-processing tasks, to estimate a depth map containing the distance of objects from the controller’s viewpoint. It then combines the depth map with a technique that estimates the camera’s orientation within a 3D scene; together, these pinpoint the vehicle’s location and its distance from everything within the virtual simulator.

Based on that information, it reorients the original pixels to recreate a 3D representation of the world from the vehicle’s new viewpoint. It also tracks the motion of the pixels to capture the movement of cars, people, and other moving objects in the scene.
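The reprojection idea can be sketched for a single pixel with a pinhole camera model. This is a minimal illustration under stated assumptions, not VISTA's renderer: the `depth` argument stands in for one value of the CNN's depth map, the intrinsics (`fx`, `fy`, `cx`, `cy`) are made-up values for a 640x480 camera, the virtual camera only moves on the ground plane and turns about the vertical axis, and occlusion, hole filling, and moving objects are ignored. All function names are hypothetical.

```python
import math

def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth into a 3D point in the camera
    frame (x right, y down, z forward) using a pinhole model."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth

def to_new_camera(point, tx, tz, yaw):
    """Express a point in a virtual camera that moved (tx, tz) meters on the
    ground plane and turned yaw radians about the vertical axis
    (positive yaw turns the camera toward +x, i.e. to the right)."""
    qx, qy, qz = point[0] - tx, point[1], point[2] - tz
    c, s = math.cos(yaw), math.sin(yaw)
    # Apply the inverse (transpose) of the camera's rotation about the y axis.
    return c * qx - s * qz, qy, s * qx + c * qz

def project(point, fx, fy, cx, cy):
    """Project a camera-frame point to pixel coordinates; None if behind the camera."""
    x, y, z = point
    if z <= 1e-6:
        return None
    return fx * x / z + cx, fy * y / z + cy

def reproject_pixel(u, v, depth, intrinsics, tx=0.0, tz=0.0, yaw=0.0):
    """Where does original pixel (u, v) land in the view from the new pose?"""
    fx, fy, cx, cy = intrinsics
    point = backproject(u, v, depth, fx, fy, cx, cy)
    return project(to_new_camera(point, tx, tz, yaw), fx, fy, cx, cy)

INTRINSICS = (500.0, 500.0, 320.0, 240.0)  # hypothetical 640x480 camera
```

For example, with these assumed intrinsics, moving the virtual camera 1 m to the right (`tx=1.0`) shifts a point 10 m straight ahead from pixel column 320 to column 270 in the synthesized view. Applying this to every pixel is how a sideways swerve away from the recorded human trajectory can be turned into a new image.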

“This is equivalent to providing the vehicle with an infinite number of possible trajectories,” says Daniela Rus, director of CSAIL and the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science.

“Because when we collect physical data, we get data from the specific trajectory the car will follow. But we can modify that trajectory to cover all possible ways of and environments of driving. That’s really powerful.”

Traditionally, researchers have trained autonomous vehicles either by following human-defined rules of driving or by trying to imitate human drivers. The MIT researchers instead make their controller learn entirely from scratch under an “end-to-end” framework: it takes as input only raw sensor data, such as visual observations of the road, and from that data predicts steering commands as outputs.
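To make the input/output contract of an end-to-end controller concrete, here is a toy sketch that maps raw grayscale pixels directly to a steering curvature using one convolution, global average pooling, and a bounded output. The architecture, `kernel`, `weight`, and `max_curvature` are all hypothetical; the article does not specify the controller's architecture, and a real controller would stack many learned layers.

```python
import math

def conv2d_valid(image, kernel):
    """2D 'valid' convolution (cross-correlation, as in CNN layers) of a
    grayscale image given as a list of rows."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

def steering_from_pixels(image, kernel, weight, max_curvature=0.2):
    """Map raw pixels straight to a steering curvature (1/m), with no
    hand-written driving rules in between."""
    features = conv2d_valid(image, kernel)
    # Global average pooling collapses the feature map into one number.
    pooled = sum(map(sum, features)) / (len(features) * len(features[0]))
    # tanh bounds the command to [-max_curvature, max_curvature].
    return max_curvature * math.tanh(weight * pooled)

# A horizontal-gradient kernel: responds to brightness increasing to the right.
EDGE_KERNEL = [[-1, 0, 1]] * 3
```

In training, the kernel and weight would be learned from the reward signal rather than chosen by hand; here they are fixed only so the mapping from pixels to a command is easy to follow.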

“We basically say, ‘Here’s an environment. You can do whatever you want. Just don’t crash into vehicles, and stay inside the lanes,’” Amini says. To accomplish this, the researchers rely on “reinforcement learning” (RL).

Reinforcement learning is a trial-and-error machine-learning technique that provides feedback signals whenever the car makes an error. In the researchers’ simulation engine, the controller starts off knowing nothing about how to drive, what a lane marker is, or even what other vehicles look like, so it begins by executing random steering angles. It gets a feedback signal only when it crashes. At that point, it is teleported to a new simulated location and has to execute a better set of steering angles to avoid crashing again. Over 10 to 15 hours of training, these sparse feedback signals help the vehicle learn to travel greater and greater distances without crashing.
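The trial-and-error loop can be illustrated on a toy lane-keeping problem. This sketch does not reproduce the researchers' algorithm or simulator: it replaces rendered images with two numbers (lateral offset and heading error), and it swaps in simple random-search hill climbing as a stand-in for a reinforcement-learning method. What it keeps from the description above is the sparse signal (the distance driven before a crash), the controller starting from zero knowledge, and the reset to a new start state after each crash. The lane width, step length, and all function names are assumptions.

```python
import math
import random

LANE_HALF_WIDTH = 1.5  # meters (hypothetical lane geometry)
STEP_DISTANCE = 1.0    # meters traveled per control step (assumed)

def rollout(weights, start, max_steps=100):
    """Drive a linear steering policy from `start` until it leaves the lane.

    The only feedback is how far the car got; nothing is reported until the
    episode ends, mirroring the sparse crash signal."""
    offset, heading = start  # lateral offset (m) and heading error (rad)
    distance = 0.0
    for _ in range(max_steps):
        curvature = weights[0] * offset + weights[1] * heading
        heading += STEP_DISTANCE * curvature
        offset += STEP_DISTANCE * math.sin(heading)
        if abs(offset) > LANE_HALF_WIDTH:  # crash: the episode ends here
            break
        distance += STEP_DISTANCE
    return distance

def score(weights, starts):
    # After every crash the car is "teleported" to the next start state.
    return sum(rollout(weights, s) for s in starts)

def train(iterations=200, step=0.05, seed=0):
    """Random-search hill climbing (a stand-in for an RL algorithm)."""
    rng = random.Random(seed)
    starts = [(rng.uniform(-1.0, 1.0), rng.uniform(-0.3, 0.3)) for _ in range(10)]
    weights = [0.0, 0.0]  # the controller starts knowing nothing
    best = score(weights, starts)
    for _ in range(iterations):
        # Trial: randomly perturb the steering policy.
        candidate = [w + rng.gauss(0.0, step) for w in weights]
        result = score(candidate, starts)
        # Keep the change only if the car drove at least as far overall.
        if result >= best:
            weights, best = candidate, result
    return weights, best, starts
```

Because a candidate policy is accepted only if it drives at least as far on the same start states, the total distance never decreases from one iteration to the next. A genuine RL method would instead use the reward to estimate how to adjust a much larger network.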

After the controller successfully drives 10,000 kilometers in simulation, the authors deploy it onto their full-scale autonomous vehicle in the real world. According to the researchers, this is the first time a controller trained using end-to-end reinforcement learning in simulation has been successfully deployed onto a full-scale autonomous car.

“That was surprising to us. Not only has the controller never been on a real car before, but it’s also never even seen the roads before and has no prior knowledge on how humans drive,” Amini says.

Because the controller was forced to run through all types of driving scenarios, it was able to regain control from disorienting positions, such as being half off the road or in another lane, and steer back into the correct lane within several seconds.

“And other state-of-the-art controllers all tragically failed at that, because they never saw any data like this in training,” Amini says.

The researchers hope to next simulate various types of road conditions from a single driving trajectory, such as night and day, and sunny and rainy weather. They also hope to simulate more complex interactions with other vehicles on the road.

“What if other cars start moving and jump in front of the vehicle?” Rus says. “Those are complex, real-world interactions we want to start testing.”

The work was done in collaboration with the Toyota Research Institute.
